Since ChatGPT’s arrival in late 2022, artificial intelligence has made one technological leap after another. But what is happening now goes beyond external shifts to a potential reshaping of our cognitive landscape. The principles behind neuroscience-inspired artificial neural networks hint at AI’s far-reaching capacity to augment, affect, and challenge the human mind.

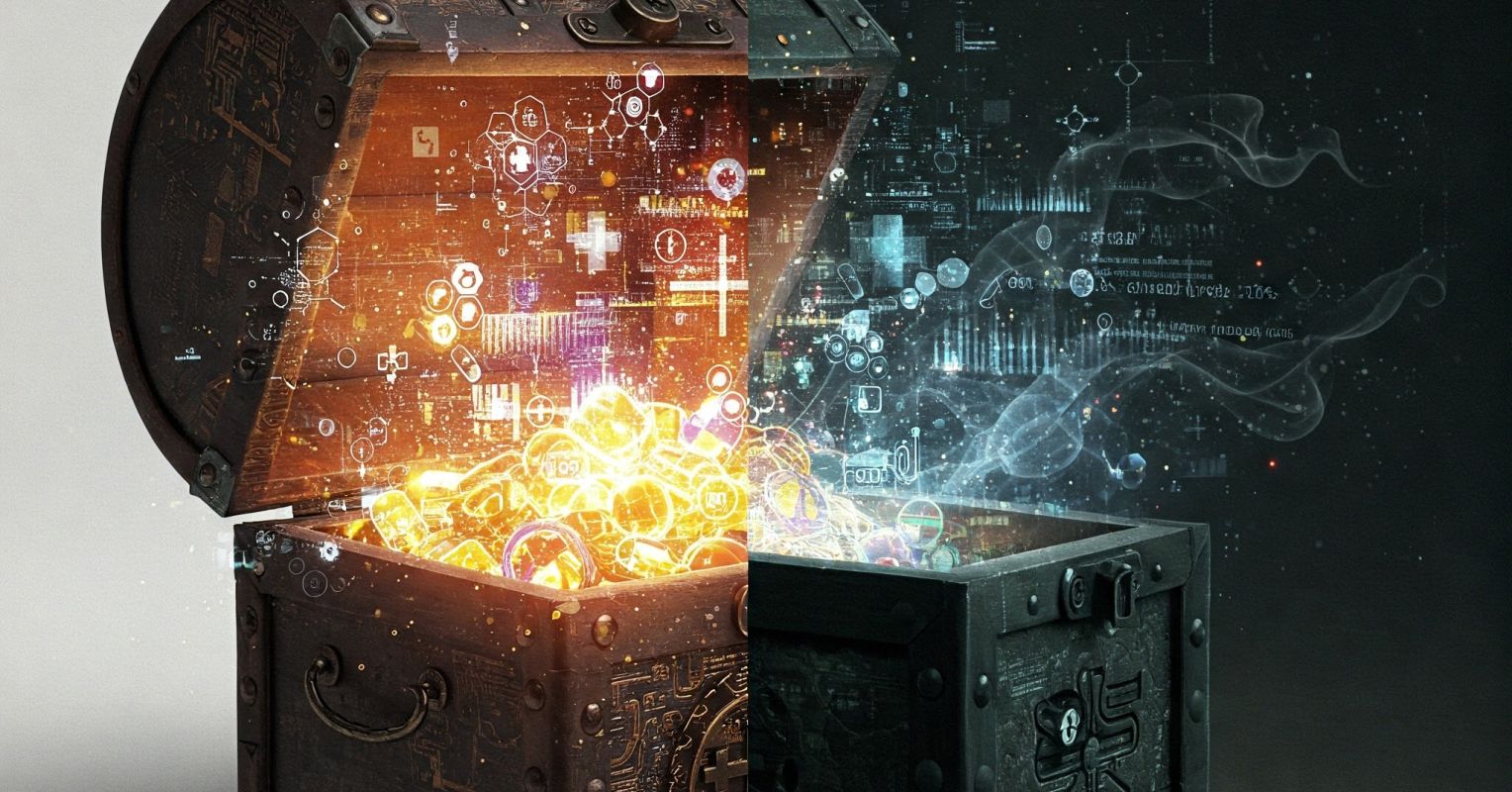

But as with any powerful force that interfaces so intimately with our internal world, from ancient myths of creation to modern explorations of consciousness, AI presents a duality. It offers a treasure chest of possibilities for understanding and enhancing human psychology, from advanced mental health diagnostics to personalized learning experiences. Yet it also carries the risk of opening Pandora’s Box, potentially unleashing unforeseen consequences for our cognition, social interactions, and sense of self.

Our initial fascination with this technology must now evolve into a deliberate and psychologically informed approach to its guidance. As AI’s capabilities expand, human agency (our conscious awareness and understanding of our own minds) becomes critical in navigating the “unknown unknowns” that lie ahead.

The Expanding Horizon: Benefits Across Industries

AI offers tangible benefits that are increasingly integrated into our daily lives, from personalized product recommendations improving the online shopping experience to AI-powered diagnostic tools assisting medical professionals in early disease detection. In finance, algorithms serve to detect fraudulent activities and personalize financial advice. In education, AI enables the development of adaptive learning platforms that cater to individual student needs. These examples illustrate just a fraction of the unfolding power of AI.

This ever-expanding treasure chest is accompanied by a parallel growth in caveats. The realization of AI’s benefits remains contingent upon thoughtful design, holistic governance, and responsible deployment. To harness social benefits systematically, AI must be tailored, trained, tested, and targeted to bring out the best in and for people and the planet. It should be prosocial. If driven solely by profit motives or unchecked technological ambition, without a strong ethical framework, AI could unleash risks that destabilize society and place immense strain on resources. The ABCD of less-talked-about AI issues includes agency decay, bond erosion, climate change, and the division of society.

Navigating the Unknown with Human Agency

While this new era of technological progress is appealing, it introduces unprecedented uncertainties. The concept of “unknown unknowns” is salient in AI, especially as these systems learn and evolve beyond their initial programming. In 2023, Geoffrey Hinton, a pioneer in deep learning, voiced his concerns about this rapid evolution, noting that the idea of AI surpassing human intelligence, once considered a distant possibility, now seemed increasingly within reach. The advancements we’ve seen since then only amplify the importance of these early warnings.

Research into the emergent capabilities of large language models continues to reveal unexpected abilities, highlighting the unpredictable evolutionary potential of AI and the inherent challenges in fully understanding the complex outputs of these models. At the same time, agentic AI is increasingly available to wider audiences. But autonomous AI systems can exhibit behaviors and decision-making processes that their creators did not intend and may not fully comprehend…

The Challenge of Unintended Consequences and Human Oversight

As AI systems become ever more sophisticated, their capacity for independent action continues to raise significant concerns about unintended consequences and the role of human oversight. The “black box” problem, where AI decision-making becomes so intricate that it is opaque even to its creators, remains a fundamental challenge to accountability and transparency. In high-stakes domains like healthcare, law enforcement, and finance, the question of responsibility when AI systems err remains largely unanswered. For example, deploying AI in autonomous vehicles raises complex questions of danger to life, and of liability in the event of accidents, underscoring the ongoing need for human agency in establishing pragmatic ethical and legal frameworks.

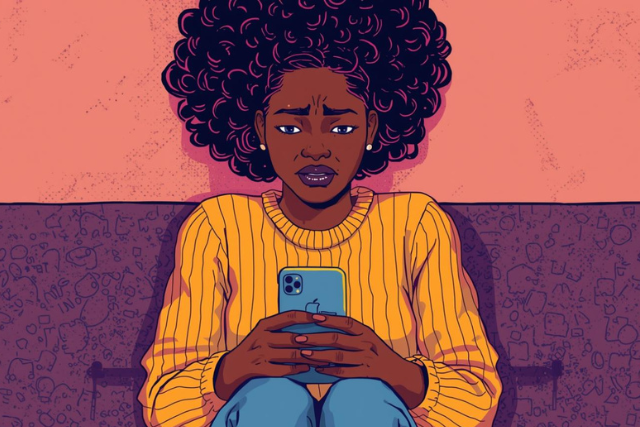

Furthermore, AI’s reliance on extensive datasets can amplify existing biases, leading to discriminatory outcomes. For example, facial recognition technology can exhibit racial bias, leading to misidentification and wrongful accusations. Similarly, research continues to highlight how algorithms used in hiring processes can inadvertently perpetuate gender and racial disparities. These examples underscore the need for careful data curation and ongoing monitoring to mitigate bias in AI systems.

The Open Question of Artificial General Intelligence

The prospect of artificial general intelligence (AI systems capable of surpassing human intelligence across a broad range of tasks) remains a significant “unknown unknown.” While current AI excels in specialized areas, recent advancements bring us closer to having to consider the potential implications of more general forms of artificial intelligence.

The possibility of an “intelligence explosion,” where an AGI not only rapidly enhances its own capabilities but autonomously creates new AI models with unimaginable capacities far beyond human control, continues to raise profound questions. While still in the realm of future possibilities, the rapid progress in AI underscores the importance of proactively considering these unsettling scenarios and engaging in thoughtful discussions about the long-term implications for humanity.

Opportunity, Obligation, and Ongoing Agency

AI presents us with remarkable opportunities, but it also opens the door to large-scale disaster. To unlock its full benefits while minimizing the risks, we must guide its development with a sense of agency. This necessitates the ongoing refinement and implementation of frameworks for transparency, fairness, and accountability across nations and industries.

But our ambition should extend beyond mere risk mitigation. This is the time to go beyond boxes; rather than using AI to do more of the same more efficiently, we now have the opportunity to create something radically different. We can actively leverage AI’s potential to address societal challenges.

AI should not be designed to replace human intelligence or to absolve us of our responsibility to address the problems we created. Instead, by consciously setting AI on a path that aligns with prosocial goals, we can harness its power to serve the common good in symbiosis with uniquely human capabilities. Our choices today and in the coming months will determine whether we effectively chart a course toward a bright horizon or drift into uncharted, perilous waters.

Practical Takeaway: GIFT

Our choices will decide whether AI turns out to be Pandora’s Box or a GIFT to humanity. The following four actions could help steer it toward the latter:

- Governance: Advocate for and support thoughtful, adaptive regulation and ethical guidelines for AI development and deployment.

- Information: Continuously learn about the latest advancements, capabilities, and potential risks of AI through reliable and diverse sources.

- Foresight: Encourage ongoing critical thinking, proactive discussions, and scenario planning regarding the long-term societal impacts of AI.

- Transparency: Demand transparency in how AI systems work, learn, and make decisions, especially in applications that significantly impact individuals and society.